jedediyah.github.io/atmim2024

AI is not what you think it is

unless you think it is hot garbage

Jedediyah Williams, PhD

Belmont High School

ATMIM 2024 Spring Conference: All Students Deserve a Piece of Pi

March 14, 2024


Hi! I'm Jed


- B.S. Computer Systems Engineering (1st gen!)

- Taught Physics, Astronomy, Algebra (2006-2010)

- Observations and modeling of exoplanets at Maria Mitchell Observatory

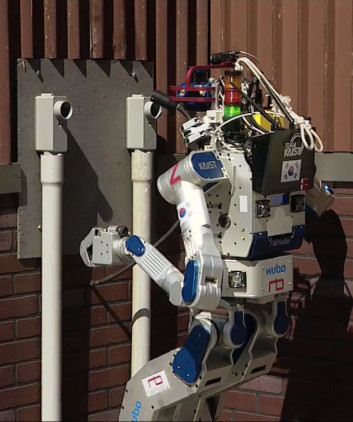

- Ph.D. Computer Science / Robotics

- NSF Graduate Teaching Fellowship in K-12 STEM Education

- Culturally Situated Design Tools

- DARPA Mobile Robotics Research

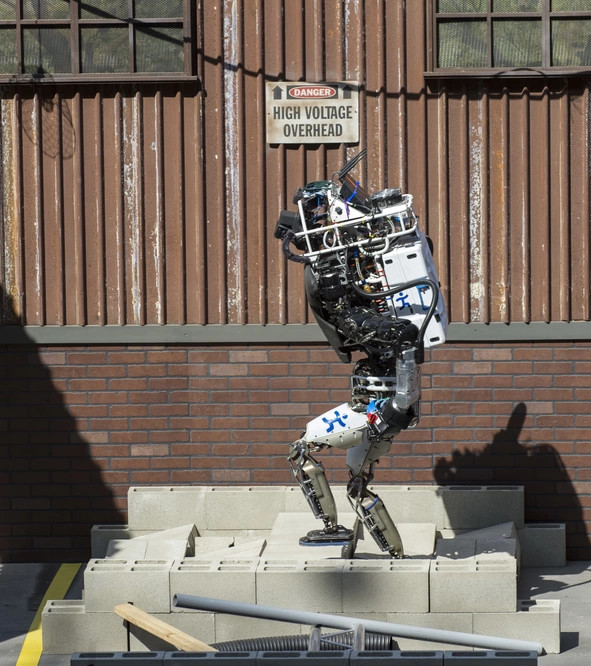

- Lockheed Martin, Team Trooper - DARPA Robotics Challenge

- Taught Math (and some Comp Sci) (2014-2023)

- M.Ed. Curriculum and Teaching (I took too many credits)

- 2017 NASA Space Robotics Challenge (Mars), Finalist

- 2019 Massachusetts PAEMST, Mathematics

- 2021 NASA Space Robotics Challenge Phase 2 (Moon), Finalist and Top 5 Winner

- Teaching Robotics, Computer Science, Physics

There is too much to talk about!


tl;dr

Math-based technologies are ubiquitous, often do not work, and are capable of broad and arbitrary harm.

- Educators should assess and use technology responsibly.

- When handing over the tools of mathematics, we are responsible as educators for teaching responsible use.

Motivations for this talk

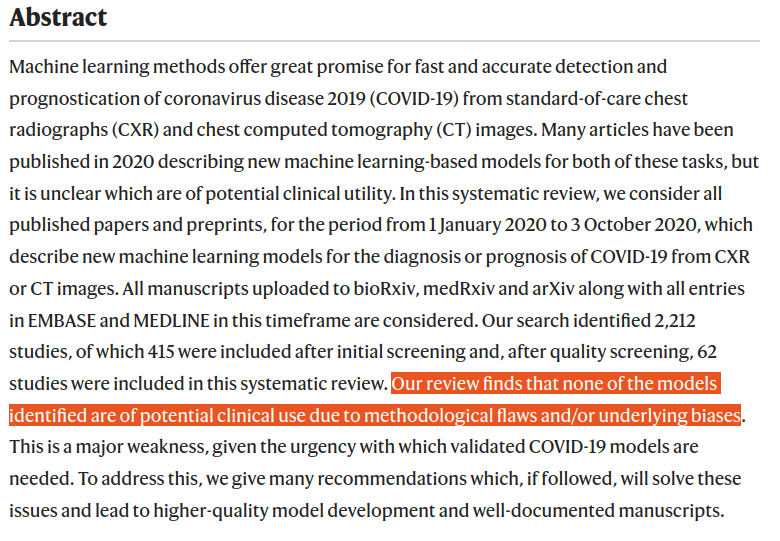

- The largely uncritical and positive view of AI being presented to math educators.

- A push to educate, not critical thinkers, but faithful consumers of surveillance technologies.

- Teacher optimism, yay!

Abridged Outline

- A brief history of AI

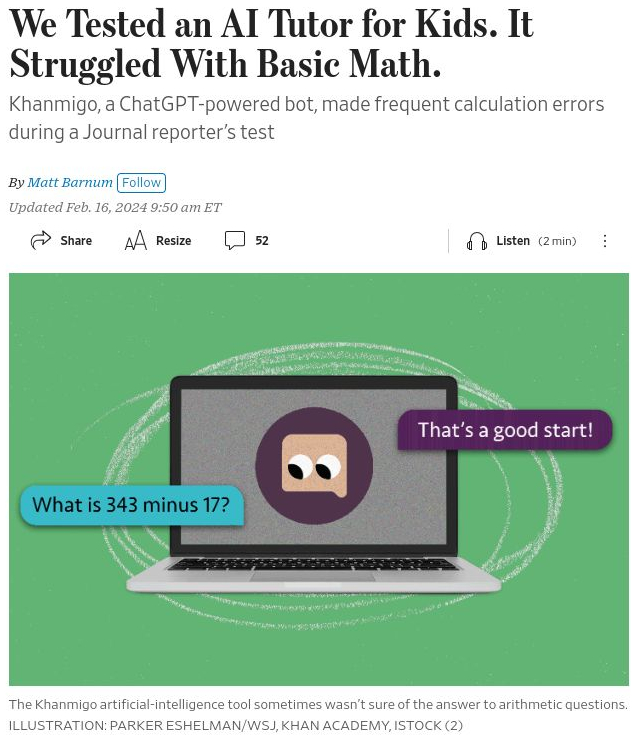

- Infinity examples of AI being garbage

- AI and our classrooms

- Remember Data Science?

- Math Neutrality and Other Mythical Creatures

- Culturally Situated Who What Now?

- NCTM? More like NCNTM... amiright?

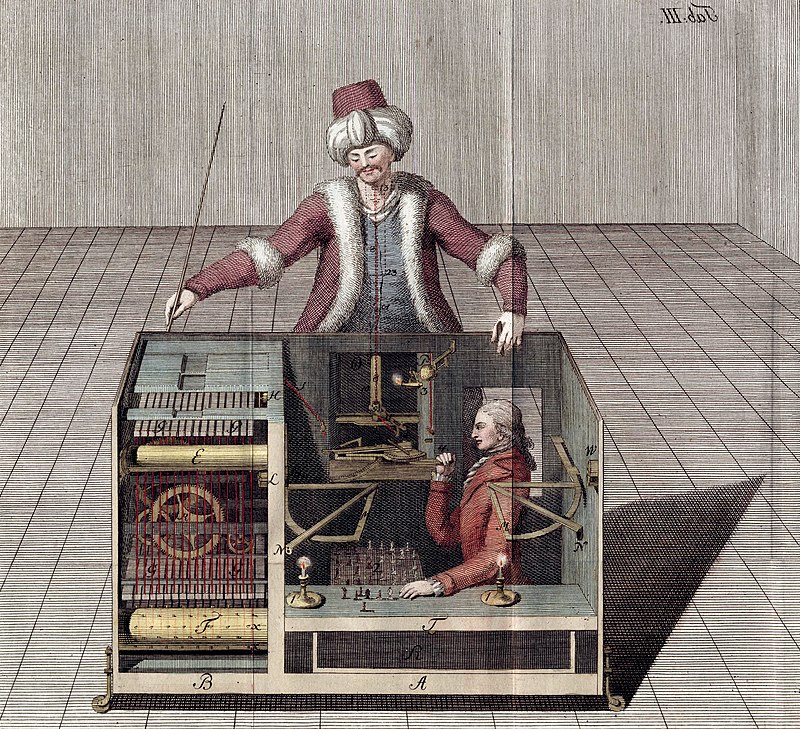

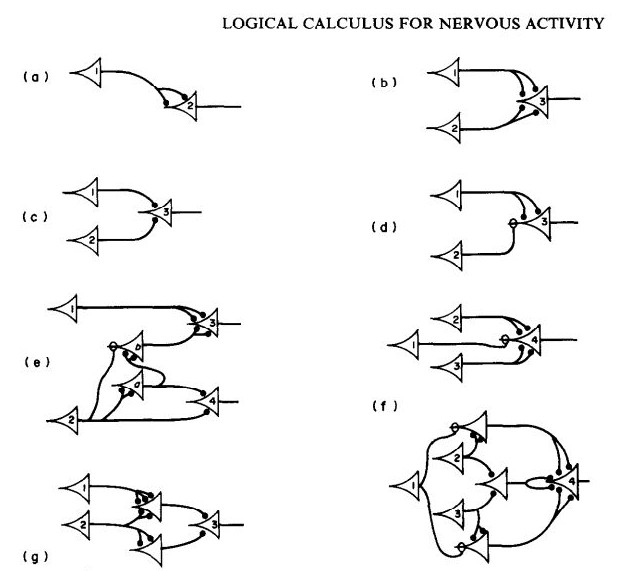

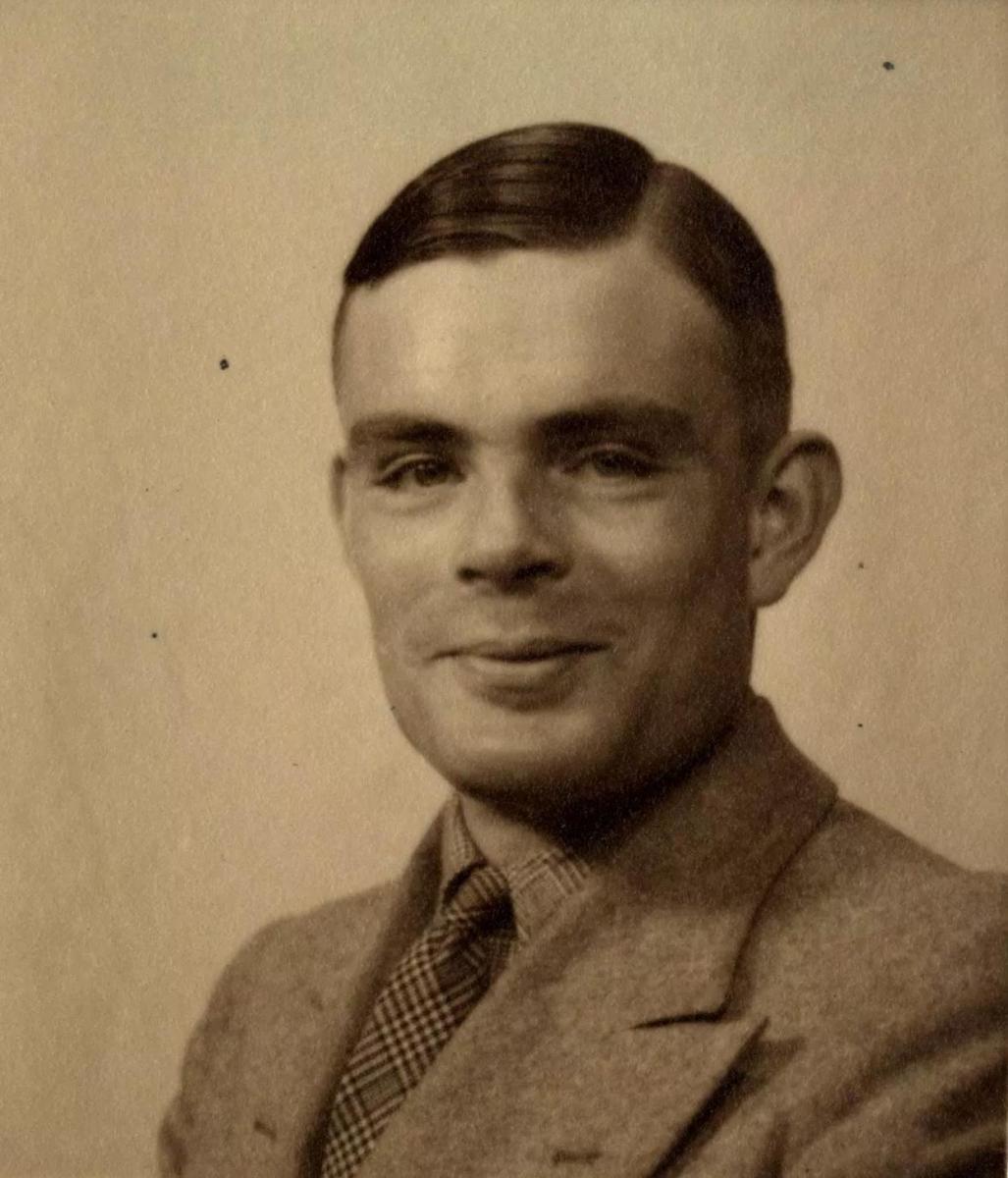

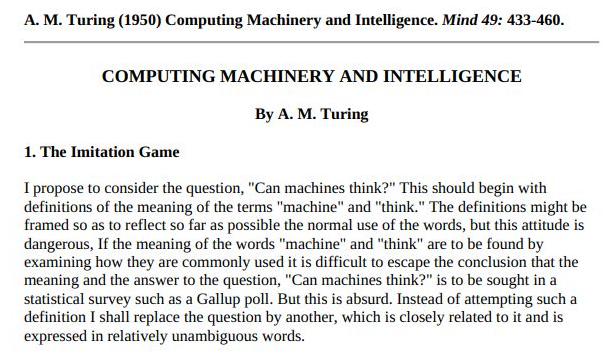

A brief and selective history of AI

Timeline: 1940 to 2025


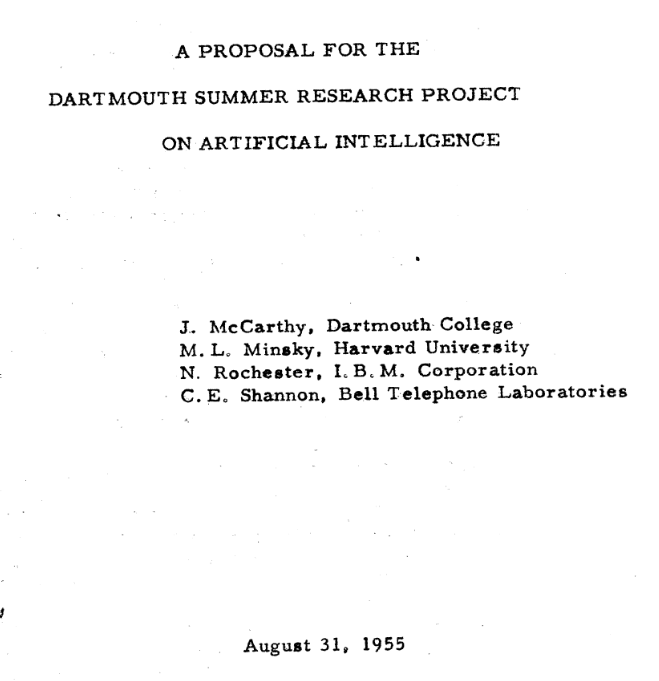


The Jetsons (1962). "Rosie the Robot".


1964 - 1967. ELIZA. Joseph Weizenbaum.


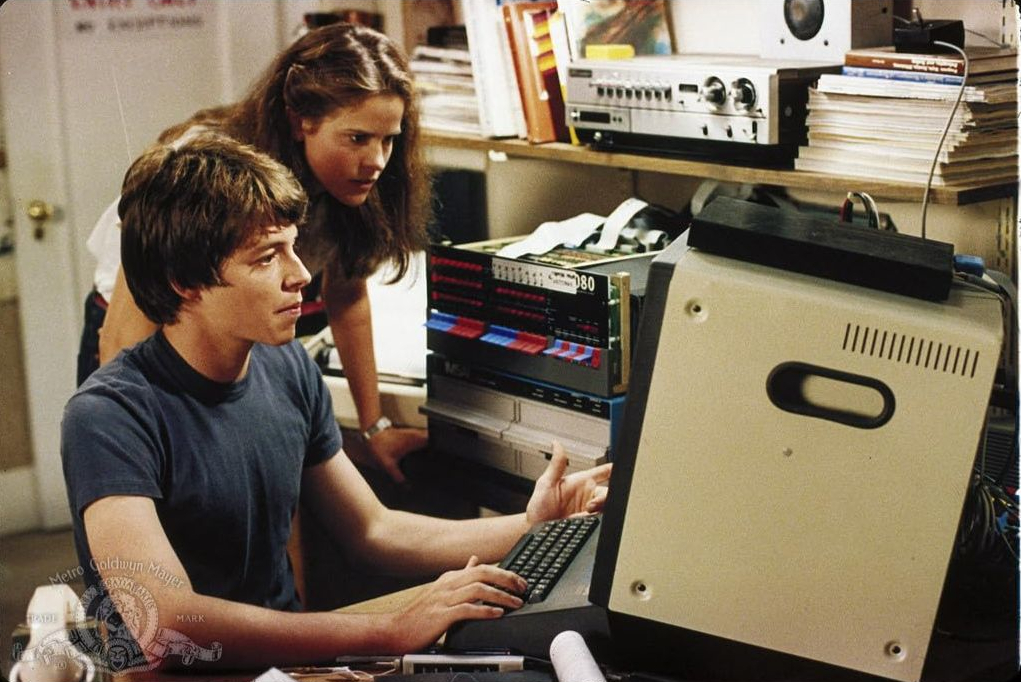

1983. Wargames.


1984. The Terminator.


1996. Kasparov defeats IBM's Deep Blue.


1997. Deep Blue defeats Garry Kasparov.


The first time I was told, earnestly, by someone who "should know" that teachers won't exist in 5 years because of AI.


2011. IBM's Watson beats Ken Jennings and Brad Rutter in Jeopardy!


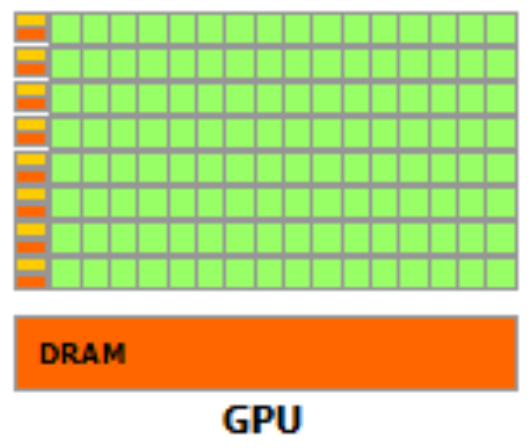

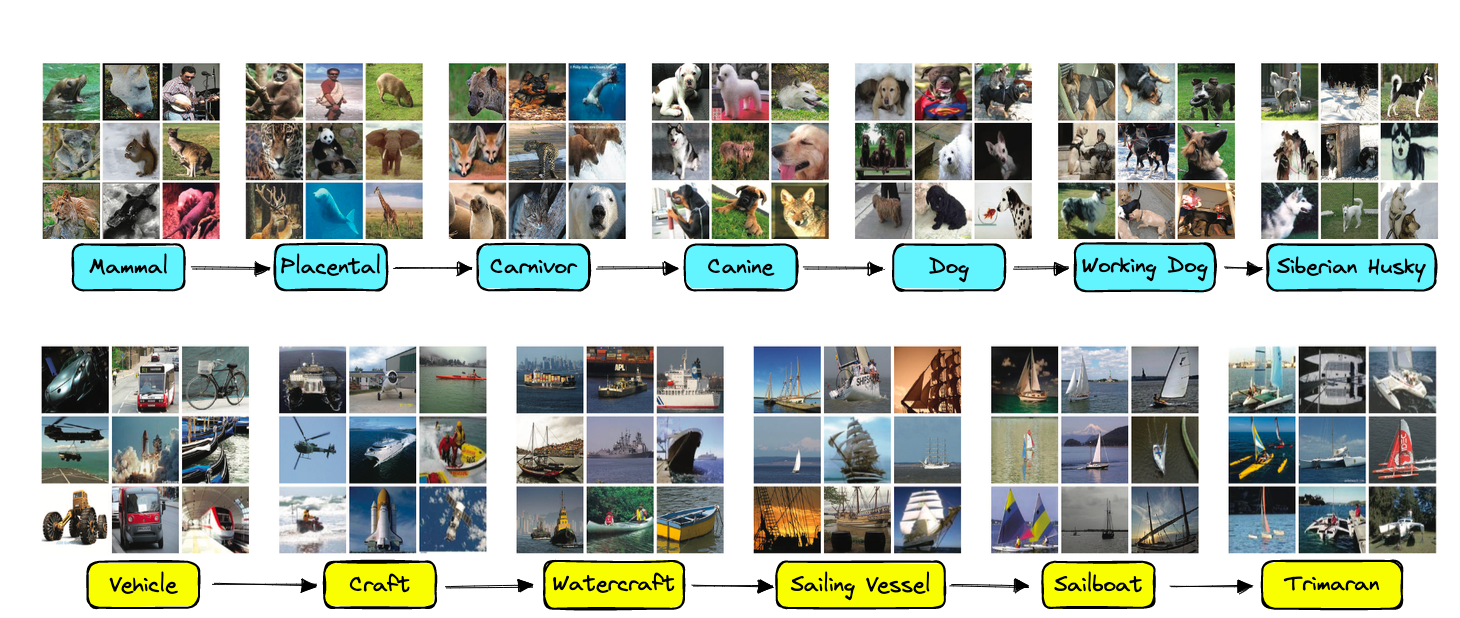

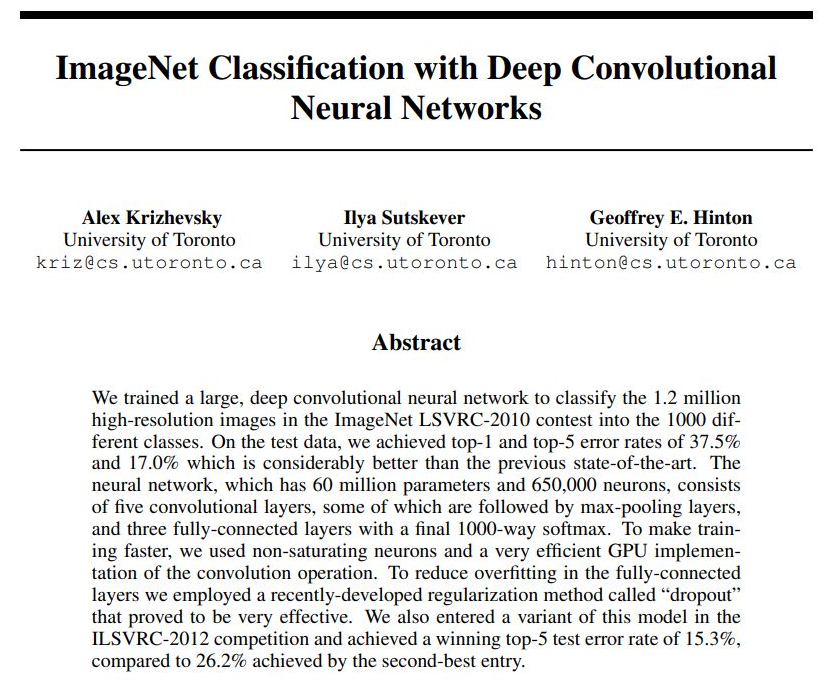

2012. AlexNet.


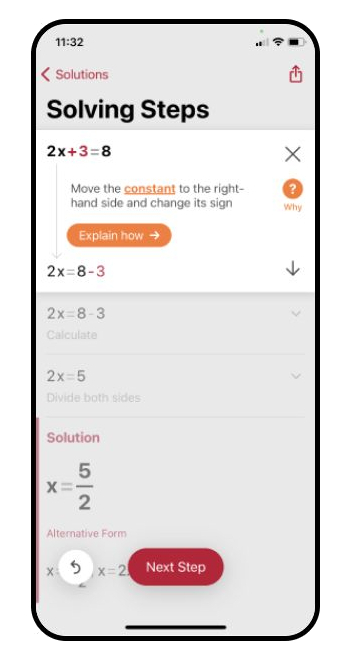

2014. PhotoMath.


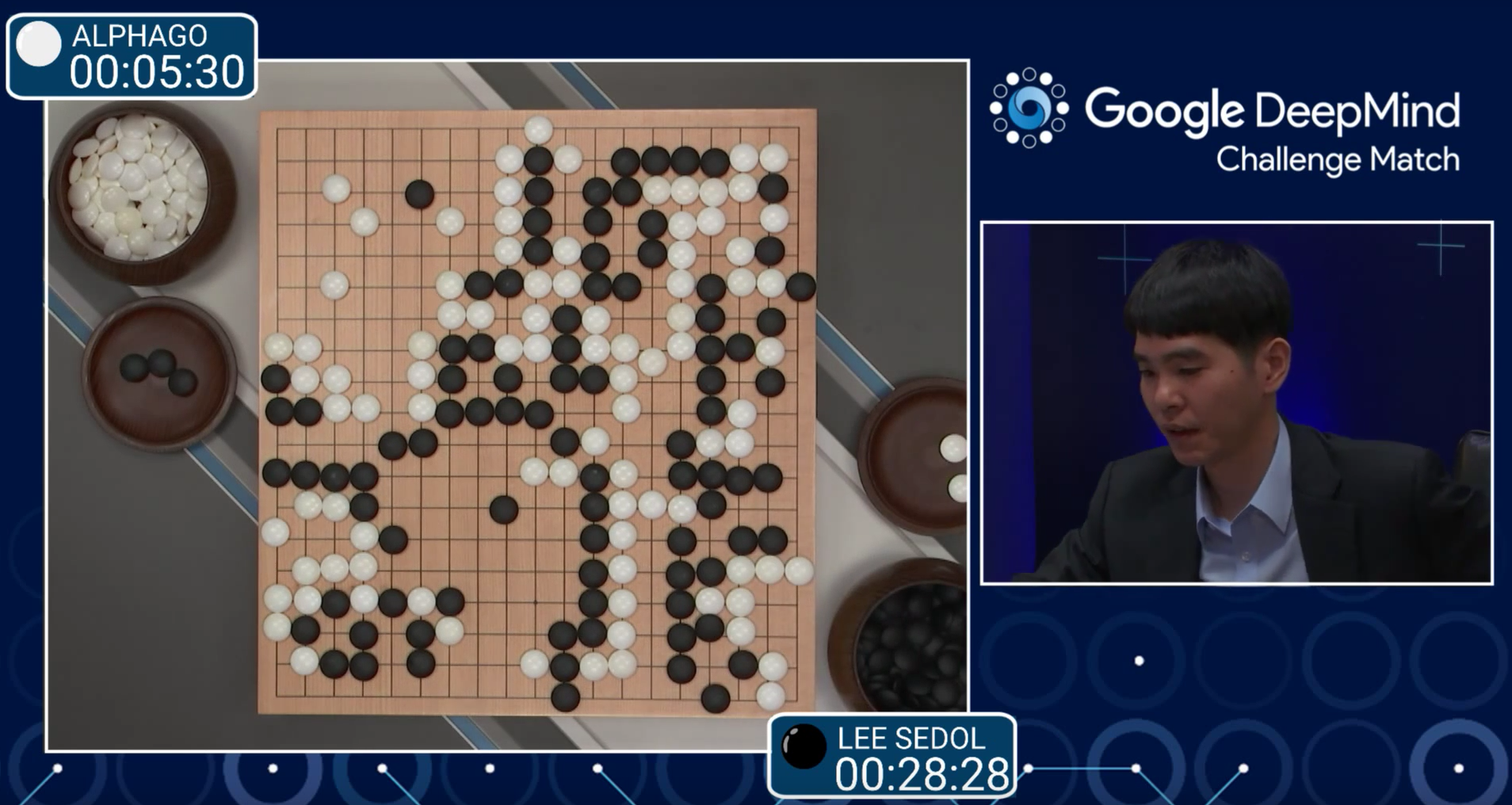

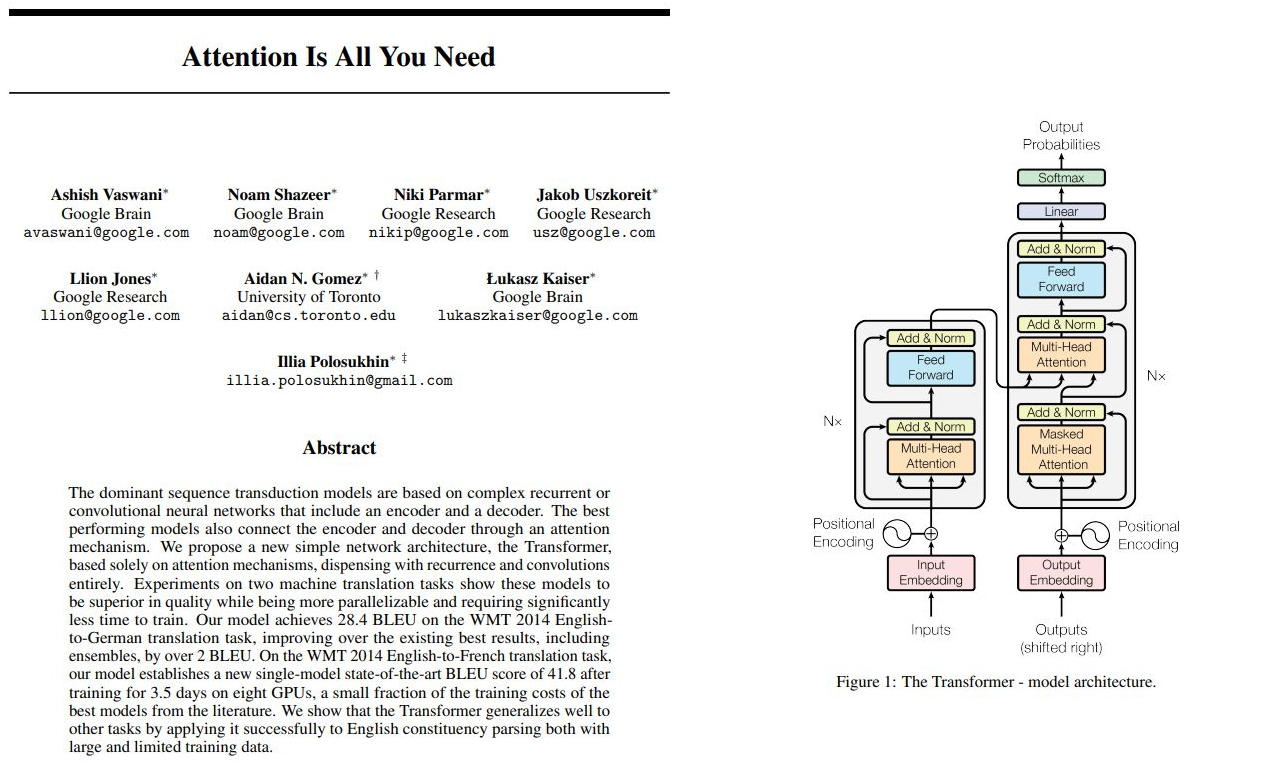

- AlphaFold (2020)

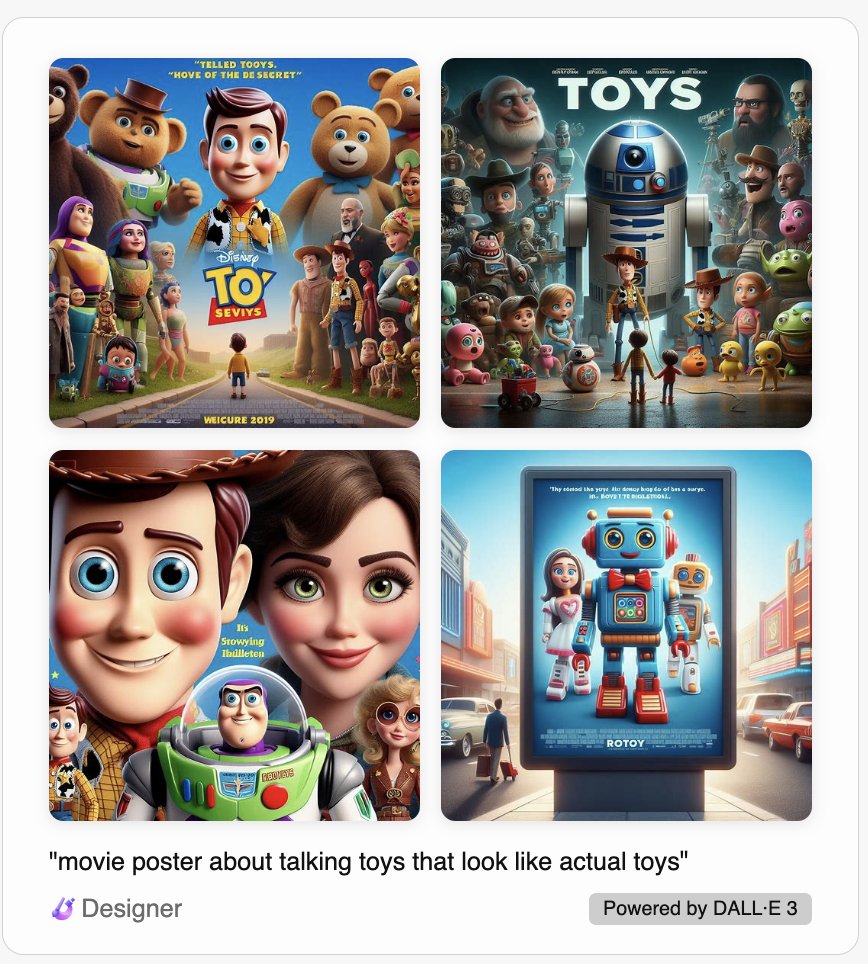

- DALL-E (2021)

- Midjourney (2022)

- Stable Diffusion (2022)

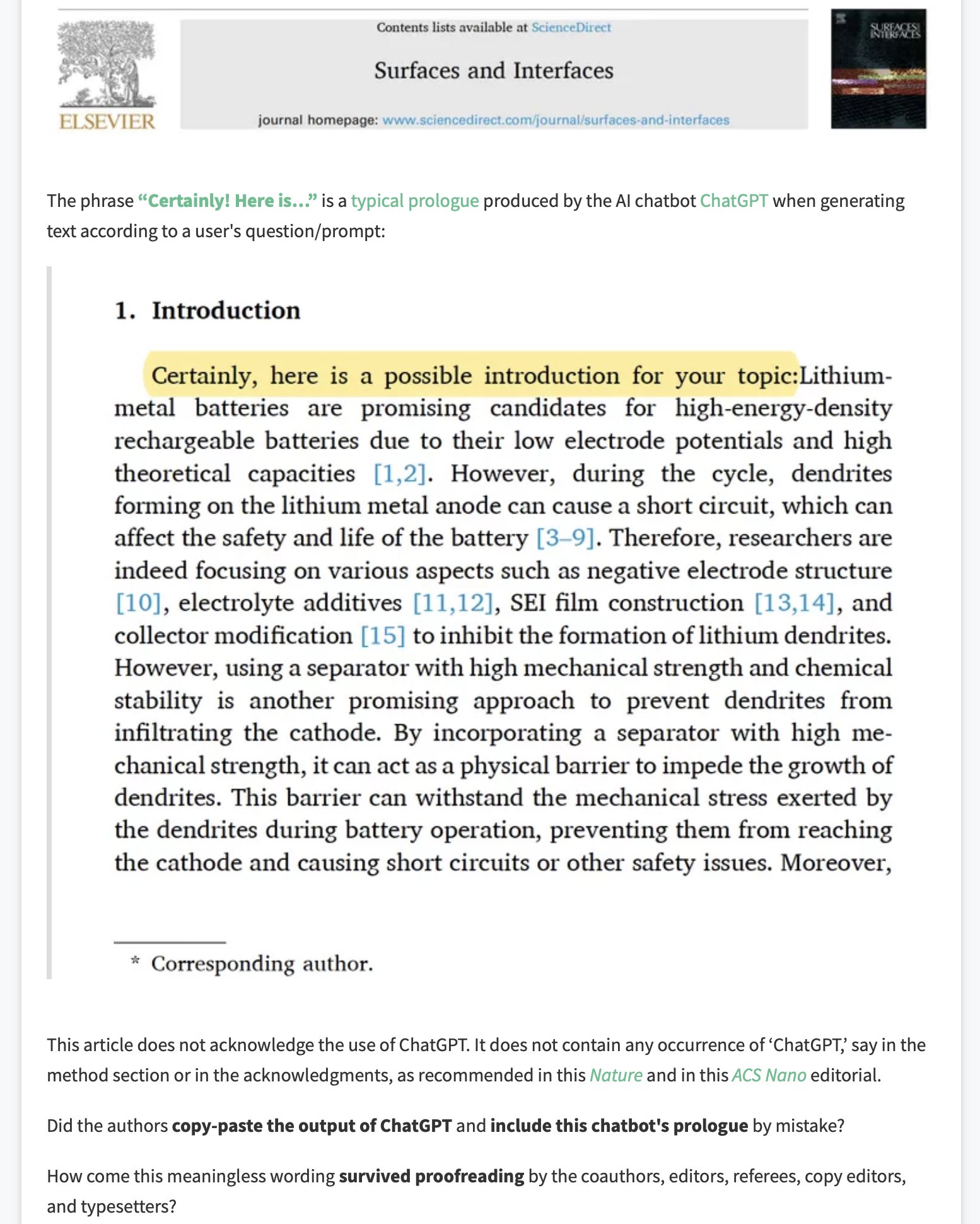

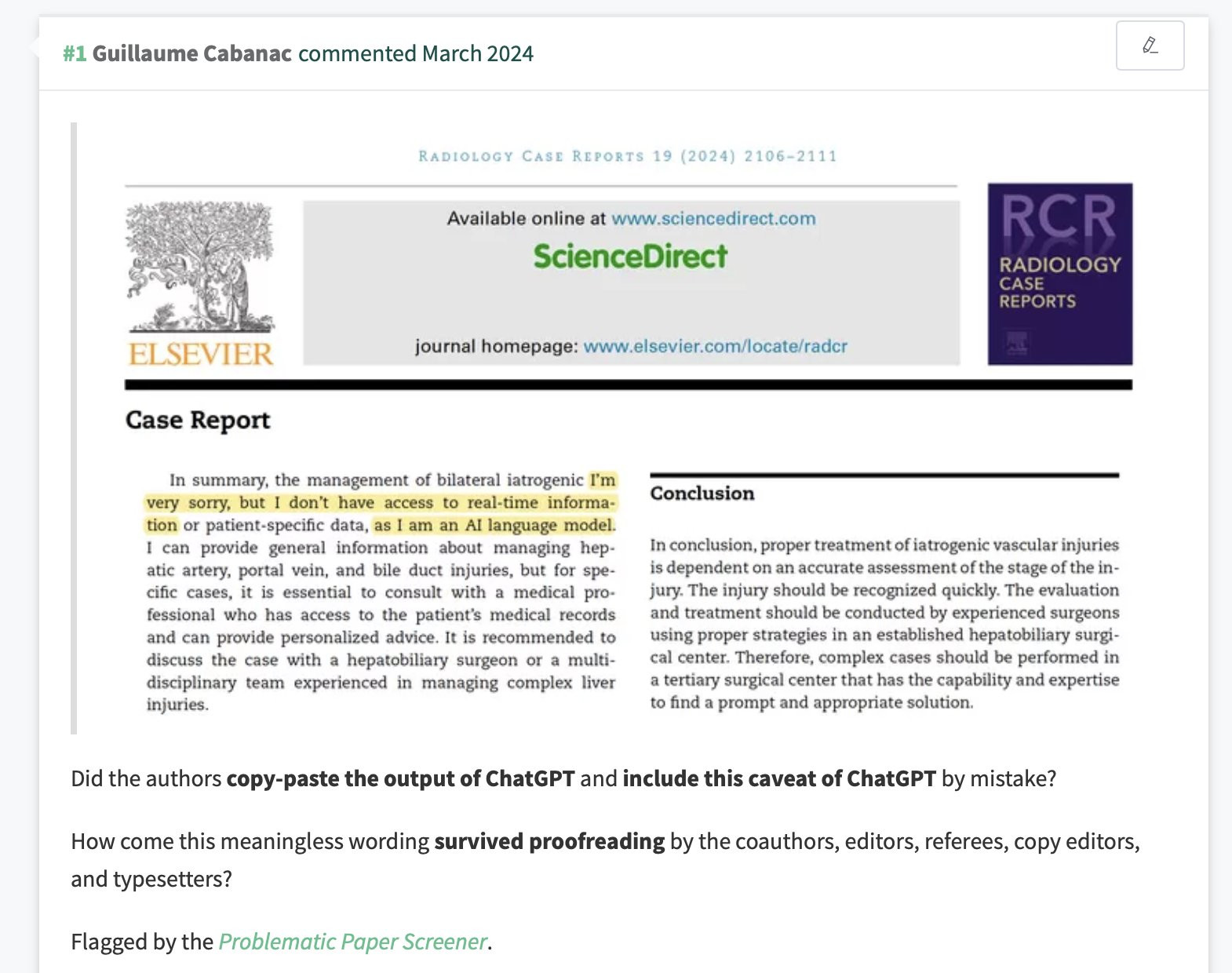

- ChatGPT (2022)

- LLaMA (2023)

- Mistral 7B (2023)

- Sora (2024)

- Gemma (2024)

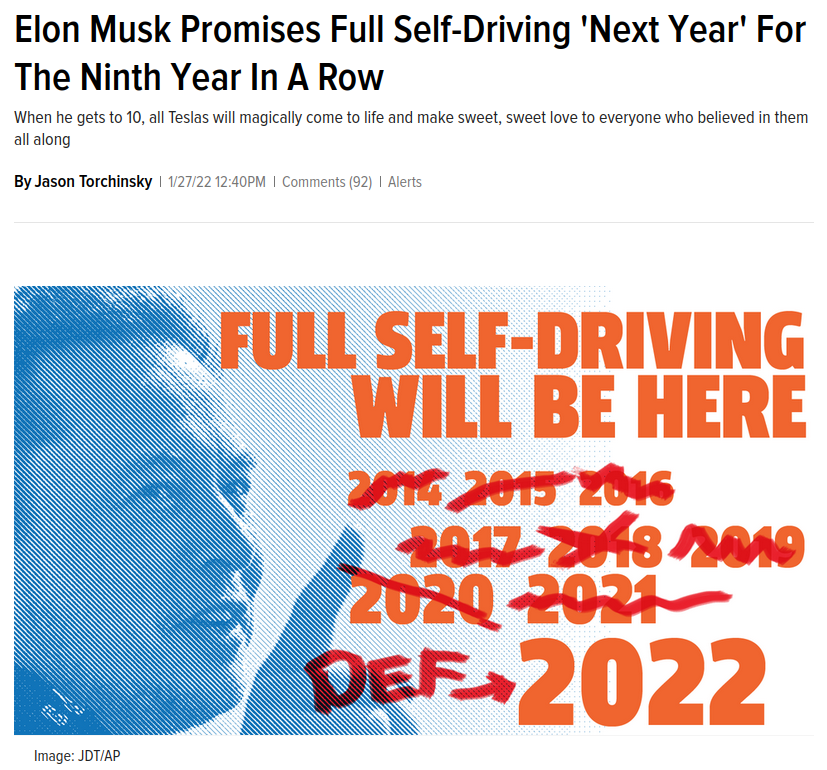

AI hype and shenanigans

- Stochastic Parrots paper

- Google firing their ethicists

- Constant bickering between cognitive scientists and tech bros

- Google Guy "leaking" that their AI is conscious (it is not)

- OpenAI's white lies about being "open" AI

- Musk suing OpenAI for lying about being open

- OpenAI sharing emails with Musk showing he was in on it

- Open letter saying we need to stop AI immediately!

- People signing the letter while definitely not stopping

- Sam Altman firing

- Sam Altman re-hiring

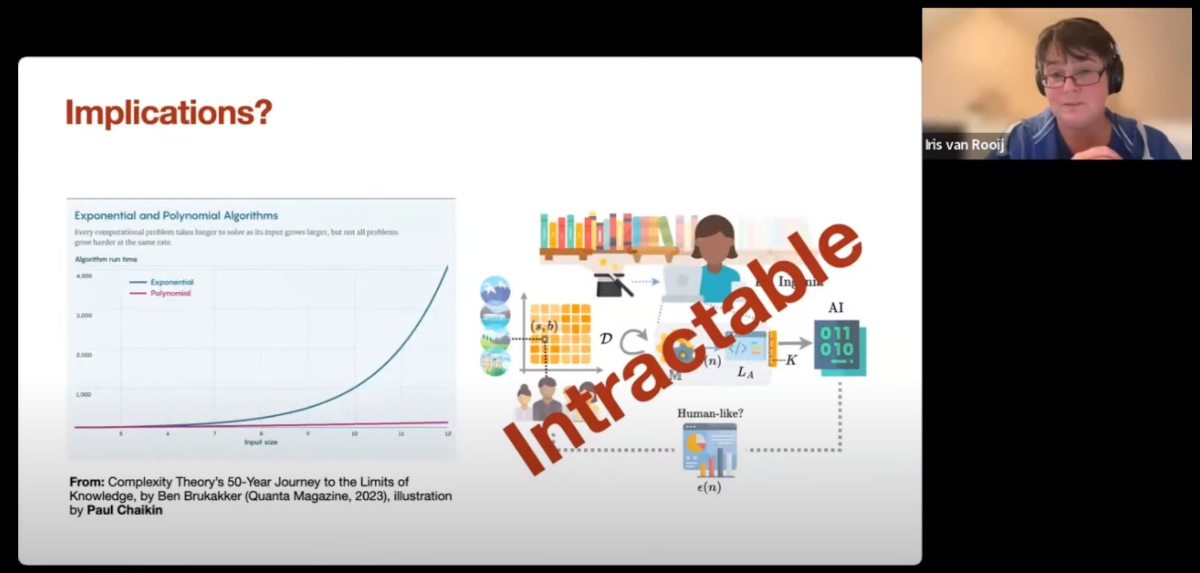

"We can be impressed by their performance on some inputs,

"We can be impressed by their performance on some inputs, but there are infinitely many inputs where they must fail."

- Iris van Rooij (26:02)

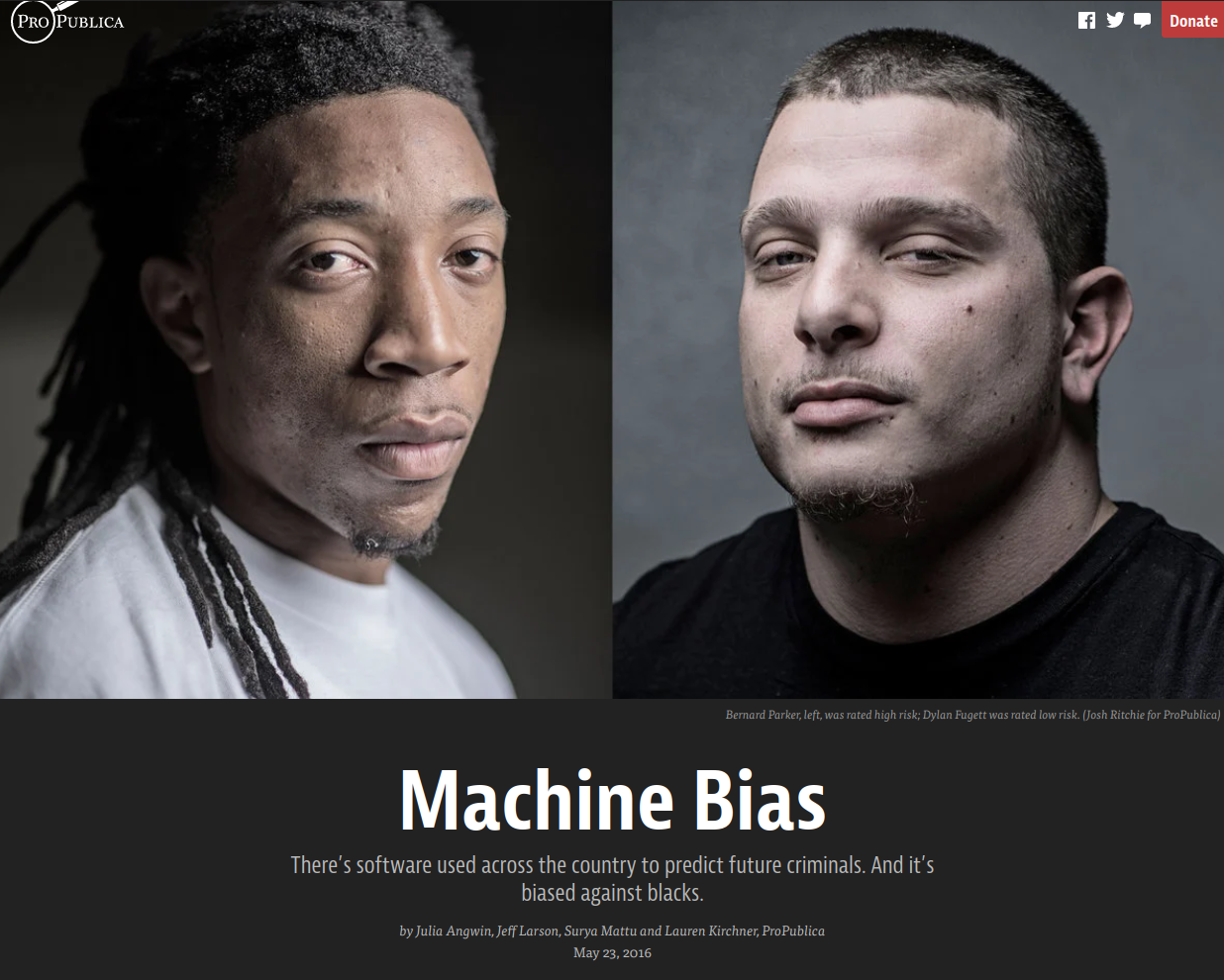

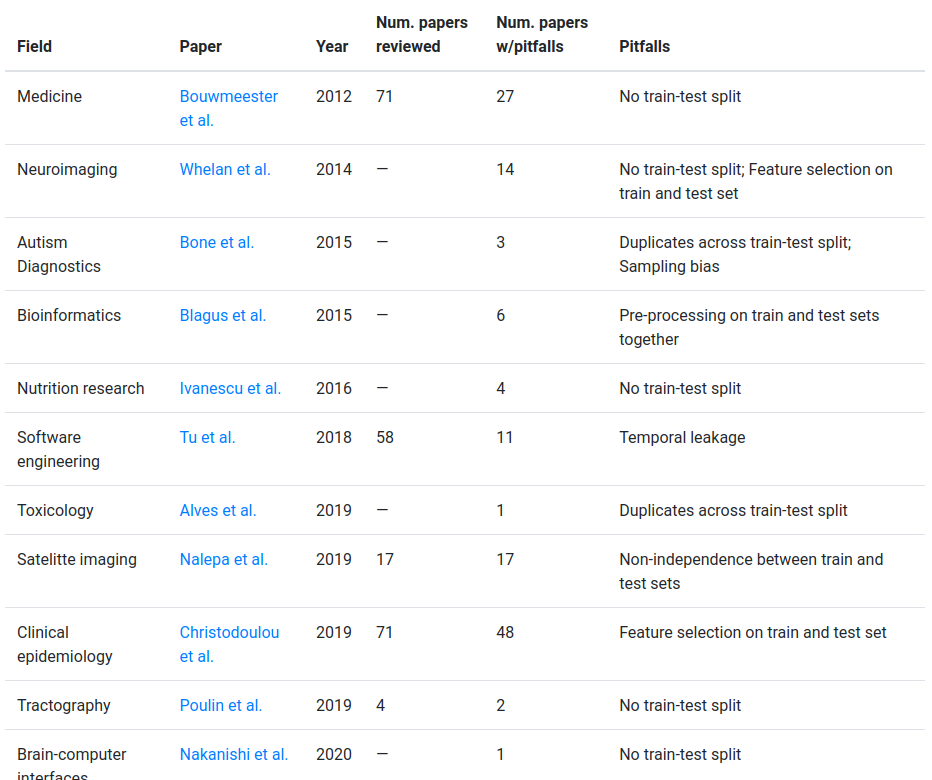

Are there any consequences of deploying broken technologies into critical decision making scenarios?


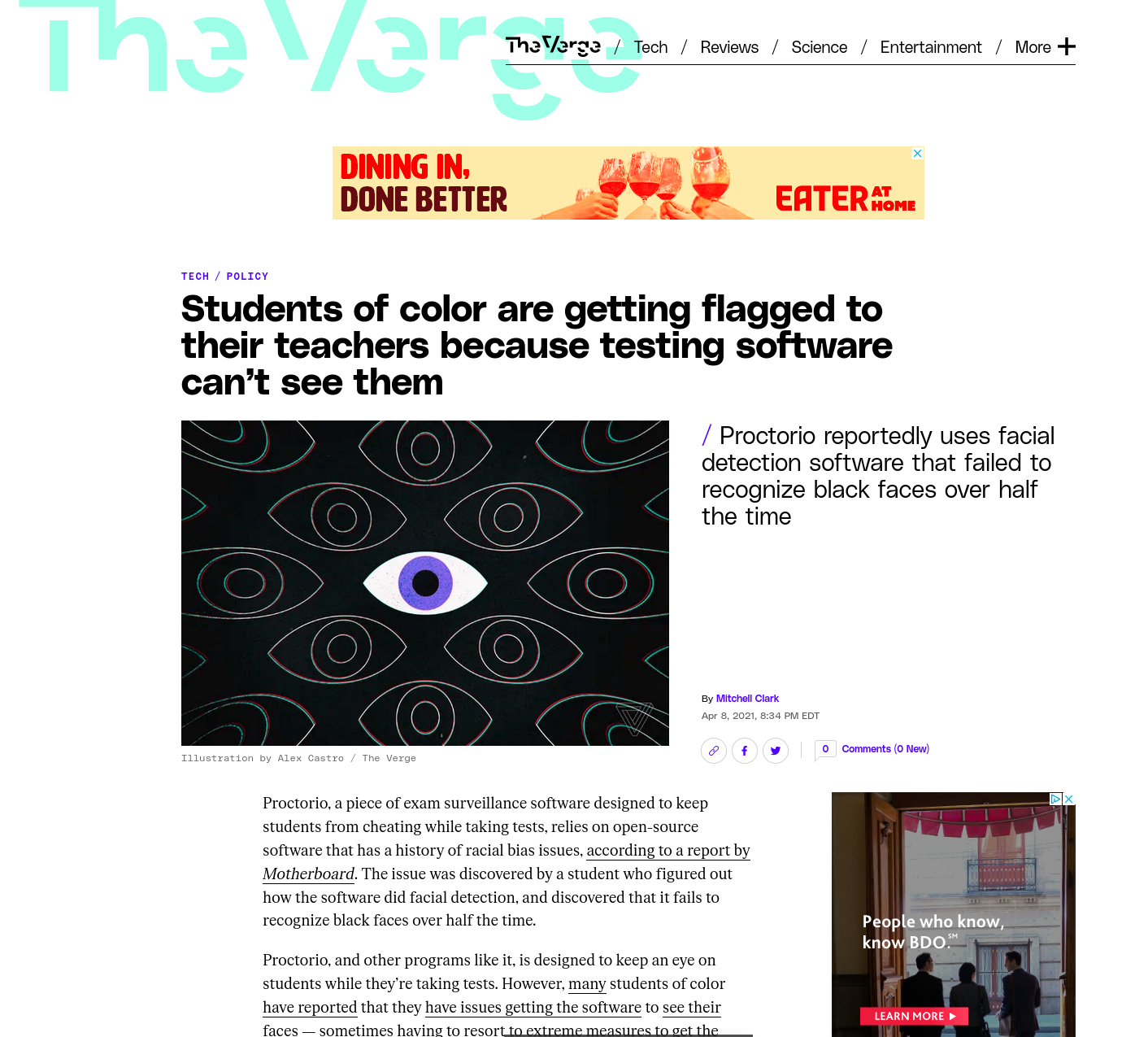

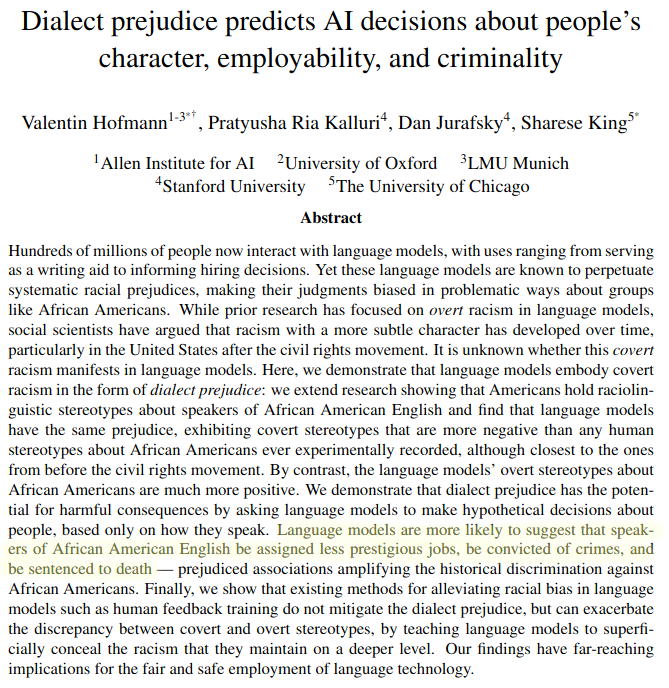

Some of the more well-known harms

Stanford Med blames algorithm

https://www.nytimes.com/2019/08/16/technology/ai-humans.html

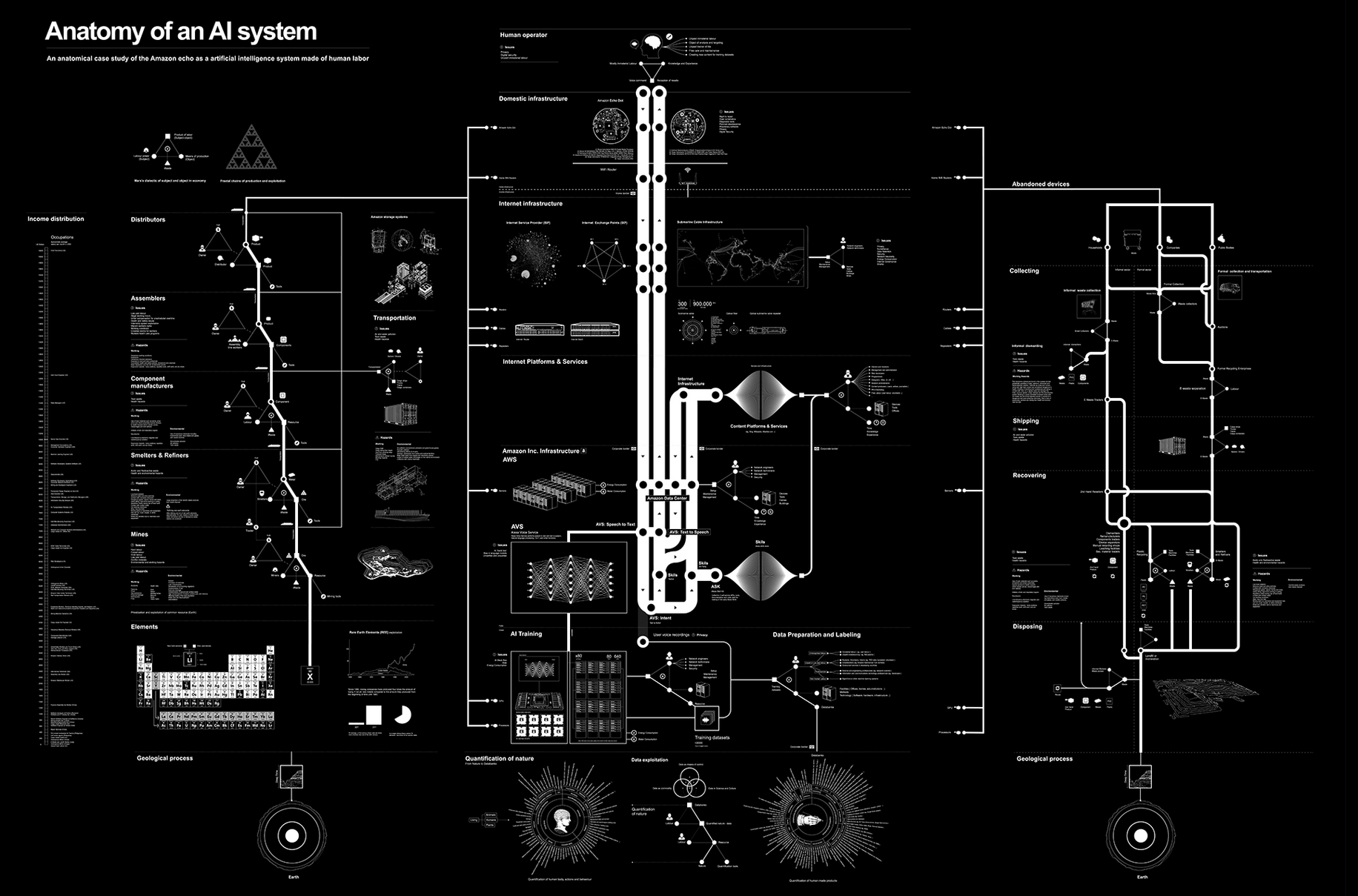

Anatomy of an AI system, Crawford and Joler


- Sun-ha Hong, Prediction as Extraction of Discretion

"Our success, happiness, and wellbeing are never fully of our own making. Others' decisions can profoundly affect the course of our lives...

Arbitrary, inconsistent, or faulty decision-making thus raises serious concerns..."

- Fairness and Machine Learning, Barocas, Hardt, and Narayanan

"Not only do many of the hiring tools not work, they are based on troubling pseudoscience and can discriminate"

Hilke Schellmann tried the myInterview tool to check her "hiring score":

- Honest interview in English: 83%

- Reading a random Wikipedia page in German: 73%

- Getting a robot voice to read her English: 79%

A quality assessment?

- Questions: not accessible

- Student responses: not accessible

- Student report: feature not available

- Parent report: feature not available

- National norms for comparison: not available

- Estimated time (4 exams × 3): 22-28 hours

- Additional time given for assessment: 0 hours

- What does it cost?: "Not sure"

- What other HS uses it?: "There are some"

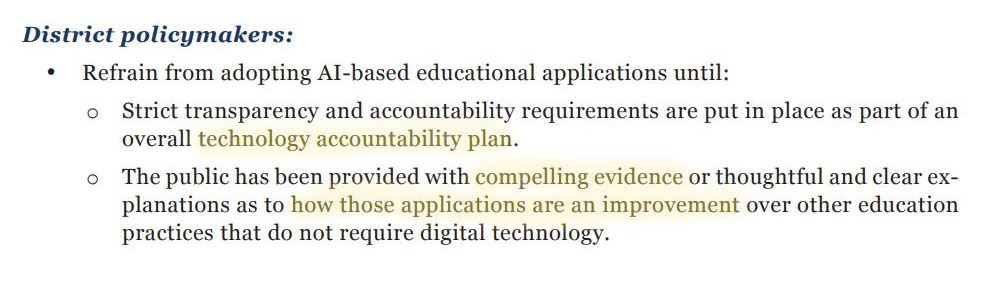

NWEA MAP, 2020 Reading Norms (achievement), page 4

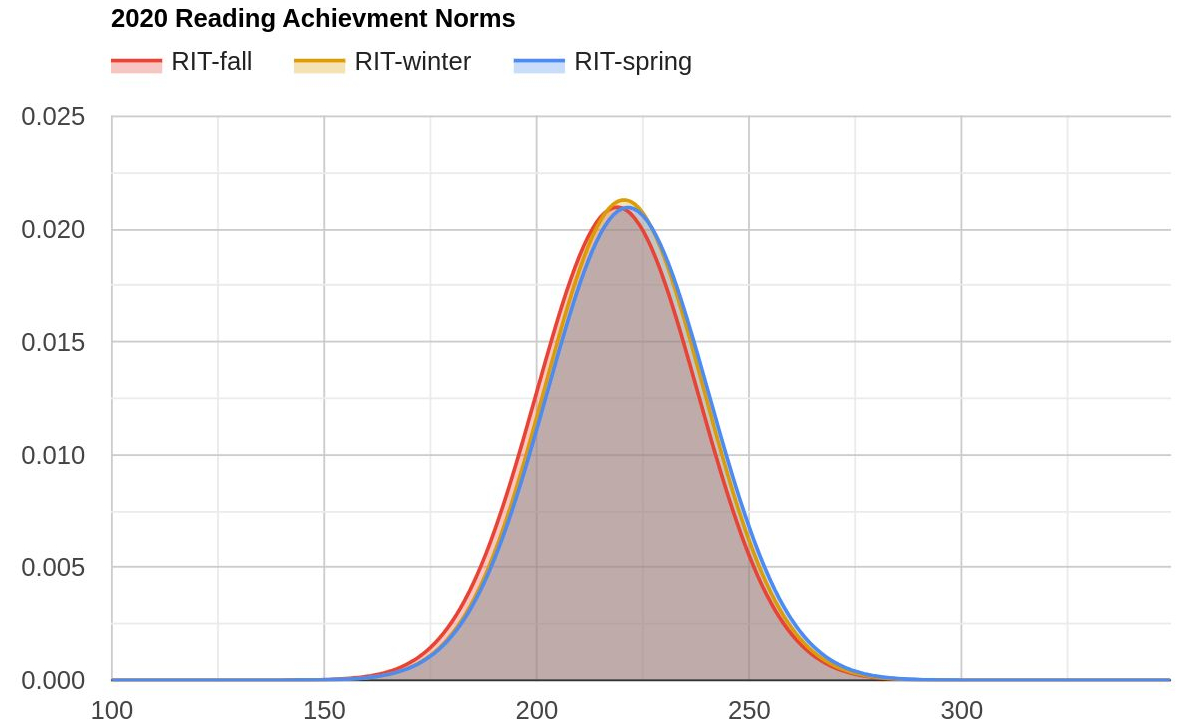

NWEA MAP, 2020 Reading Norms (growth)

Given the distribution for the 2020 Reading Growth Norms, the percentage of students expected to show growth is ___%.
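If growth scores are modeled as roughly normal with mean μ and standard deviation σ (an assumption made here for illustration; the actual norm parameters are not reproduced), the expected share of students showing positive growth is P(G > 0) = Φ(μ/σ). A minimal sketch, where `fraction_showing_growth` is a hypothetical helper and the inputs are made up:

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal CDF, written in terms of the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def fraction_showing_growth(mean_growth: float, sd_growth: float) -> float:
    """Share of students with growth > 0, ASSUMING growth ~ Normal(mean, sd).

    Hypothetical helper for illustration; the normality assumption and the
    parameters are not taken from NWEA documentation.
    """
    if sd_growth <= 0:
        raise ValueError("sd_growth must be positive")
    # P(G > 0) = 1 - Phi((0 - mean) / sd) = Phi(mean / sd)
    return normal_cdf(mean_growth / sd_growth)


# Made-up inputs: a mean gain equal to one standard deviation of gains.
print(f"{fraction_showing_growth(1.0, 1.0):.1%}")  # → 84.1%
```

Under any normal model like this, the model itself predicts that some students (about 16% with these made-up inputs) will not show growth.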

Assessment Takeaways

- It's easy for smart, well-meaning people to do terrible things that hurt students.

- Vetting technologies is important and haphazard adoption / implementation hurts students.

- Sometimes, "don't do this" is the right choice.

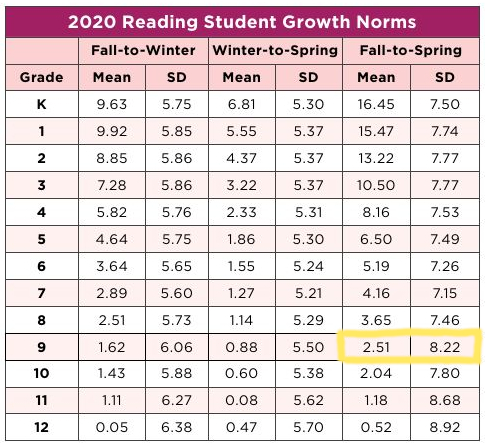

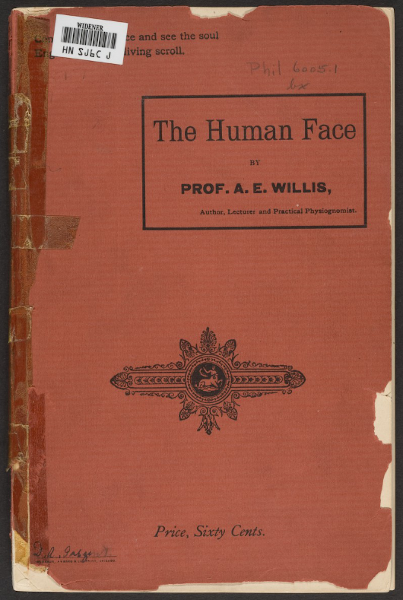

Let's play a game!

It's called physiognomy!

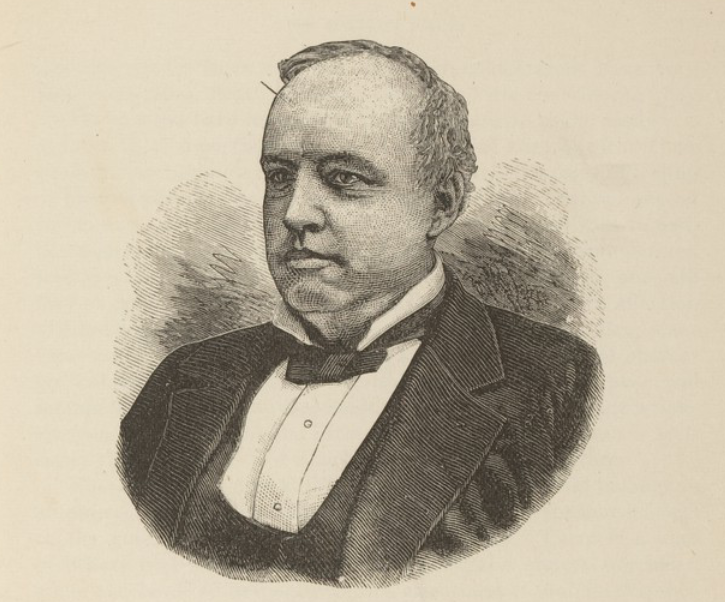

Good!

"He is a person of large vital force and chest capacity; great intellectual power and command of language...

Physically considered, he is a splendid animal"


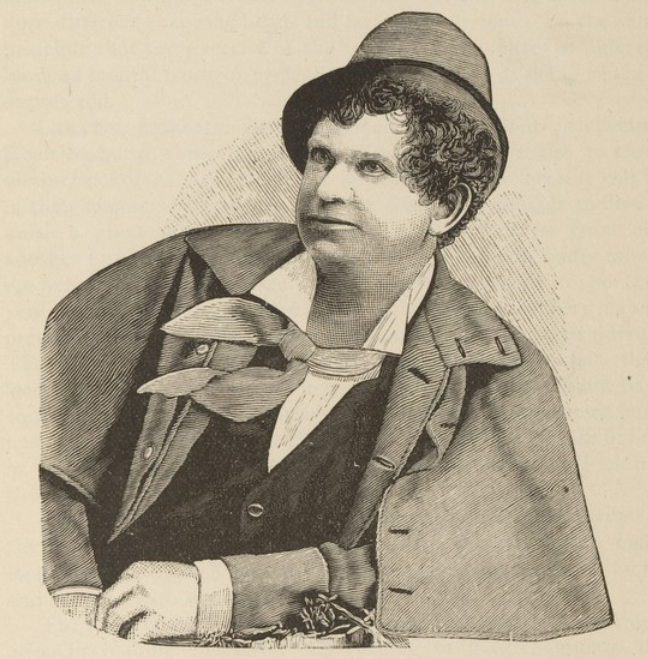

Bad :(

"Here is a nature that will want to receive money without having to work hard for it...

judging from this picture she has a free and easy style of conduct and not very conscientious as to right and wrong"


Bad :(

"Here is a mouth that looks beastly and the expression of the eyes is anything but pure...

There is little good to be seen in this face; it is indicative of a low, coarse and gross type of character."

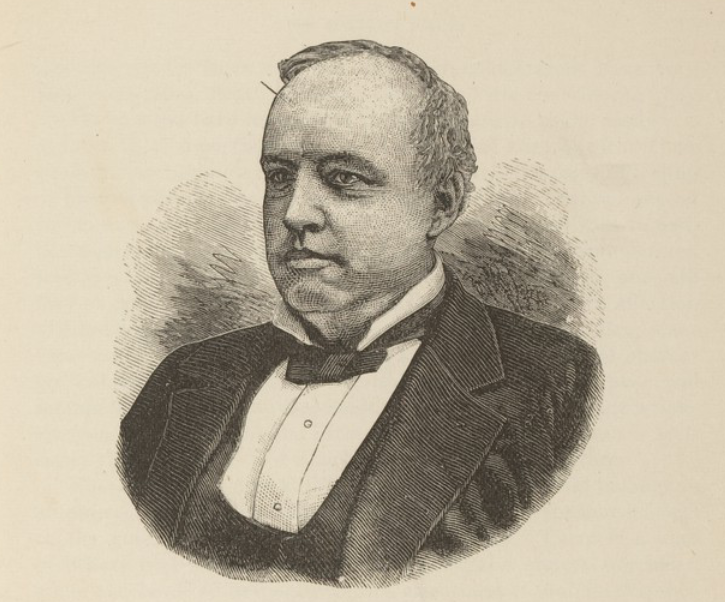

Good!

"What a noble countenance, and what a magnificent head in the top part where the moral faculties are located!

... The expression of the eyes is pure, wise and honest."


Good!

"The perceptive faculties are very largely developed in this gentleman. Observe the immense development directly over the nose and eyes, which imparts an observing, knowing, matter-of-fact and practical cast of mind."

Evil!!

"These small, black eyes are insinuating, artful, suggestive and wicked.

The face, though pretty, is mere animal beauty; nothing spiritual about it."


Wicked!!

"This is an artful, evasive, deceitful, lying, immodest and immoral eye; its very expression is suggestive of insincerity and wickedness...

The mouth also has a common and fast look."


Highway robber!

"An unprincipled looking face; the eyes have a sneaky appearance...

The upper part of the forehead in connection with the hair seems to say, I prefer to make my living by my wits..."


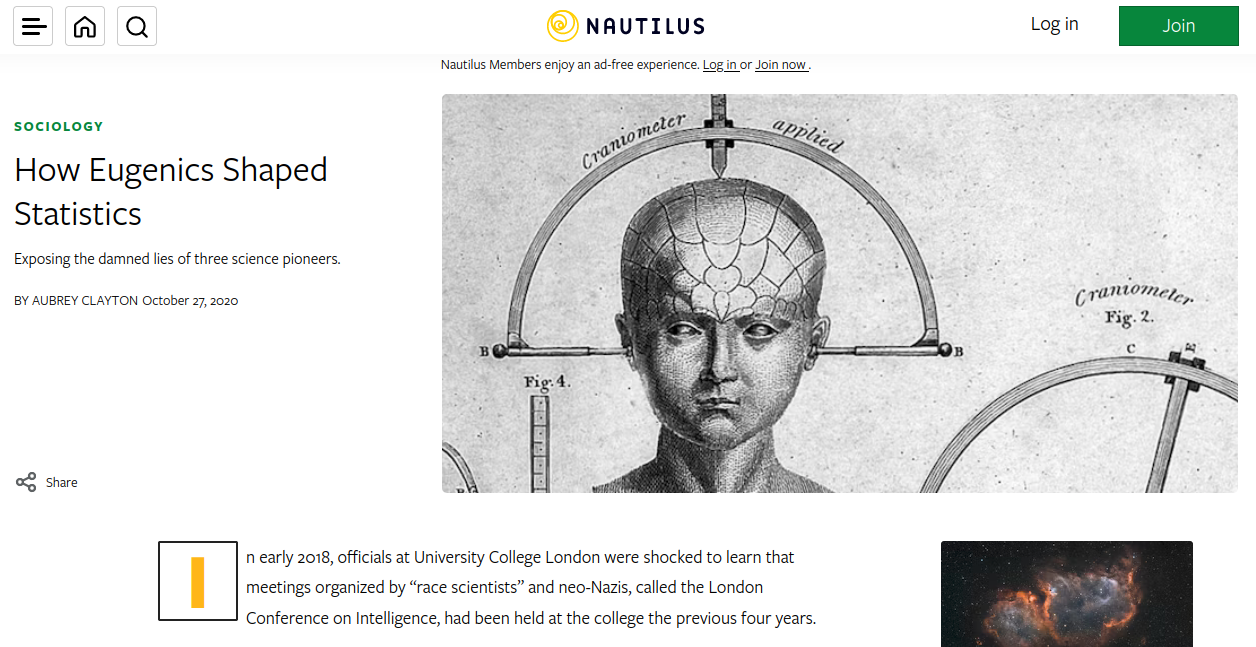

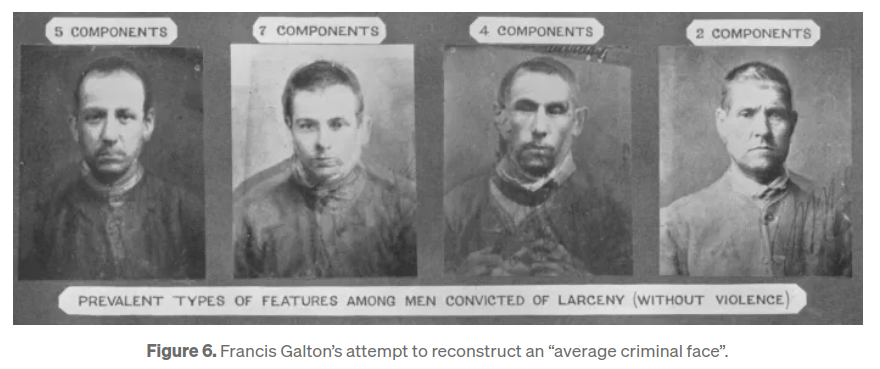

Galton coined the term "eugenics" in 1883.

Pearson was a student of Galton's and they worked with Fisher.

The three were pioneers of statistics, which developed through their attempts to give bigotry a scientific foundation.


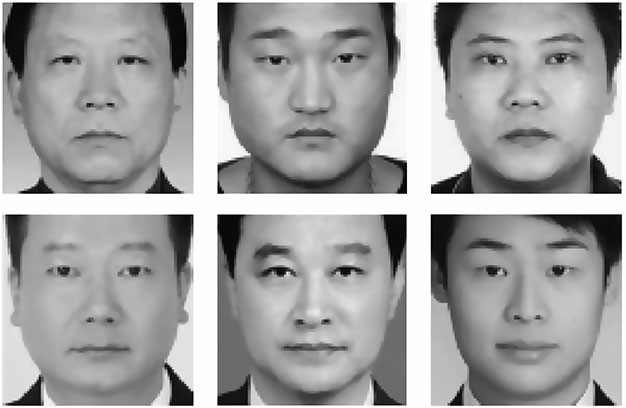

https://medium.com/@blaisea/physiognomys-new-clothes-f2d4b59fdd6a

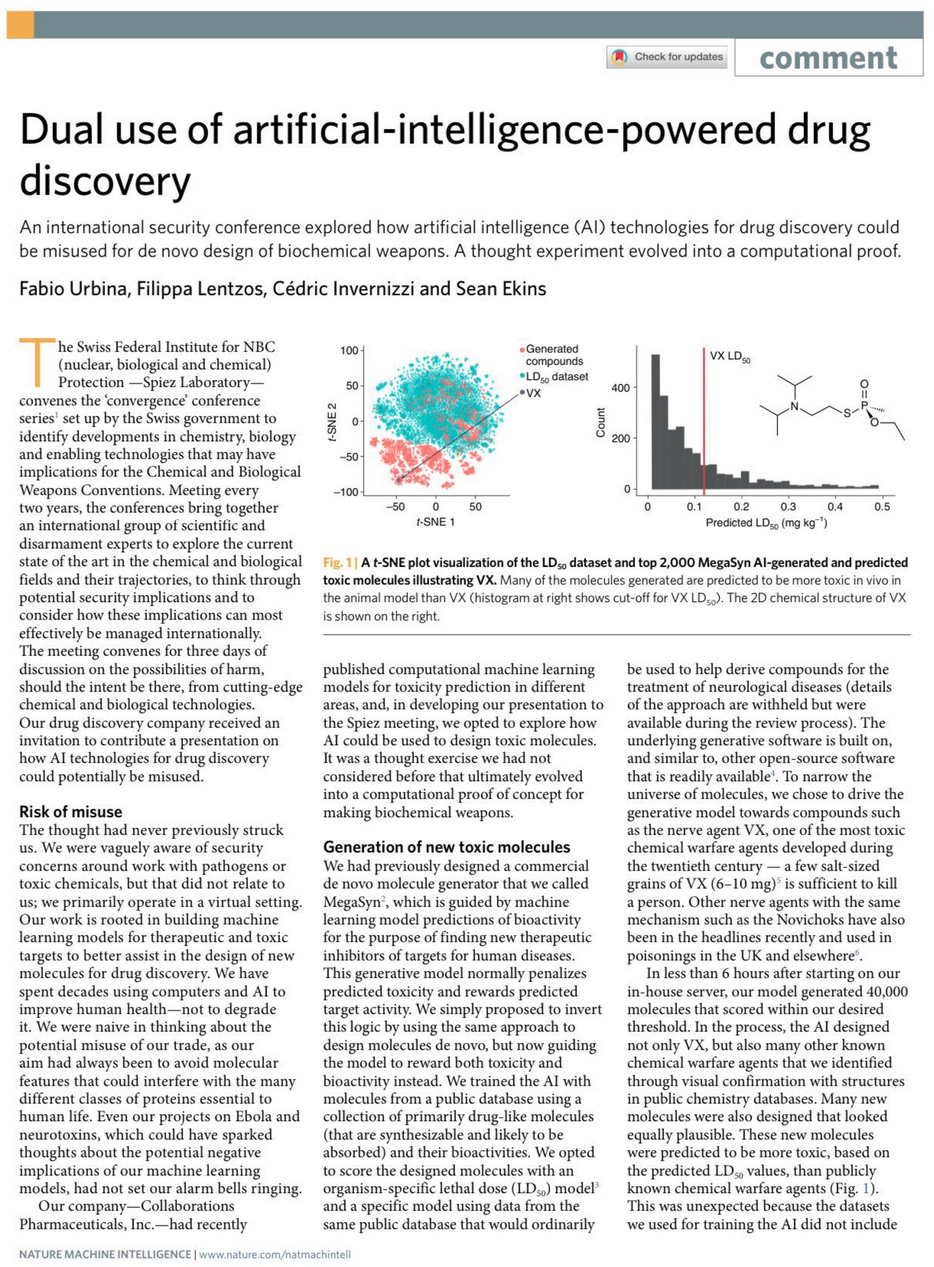

"Wu and Zhang’s sample ‘criminal’ images (top) and ‘non-criminal’ images (bottom)." 2016

To what extent are we training the next generation of pseudoscientists?


Many ethical pitfalls are technical pitfalls.

"Simplistic stereotypes is really not a basis for developing AI, and if your AI is based on this then basically what you're doing is enshrining stereotypes in code." (11:42)

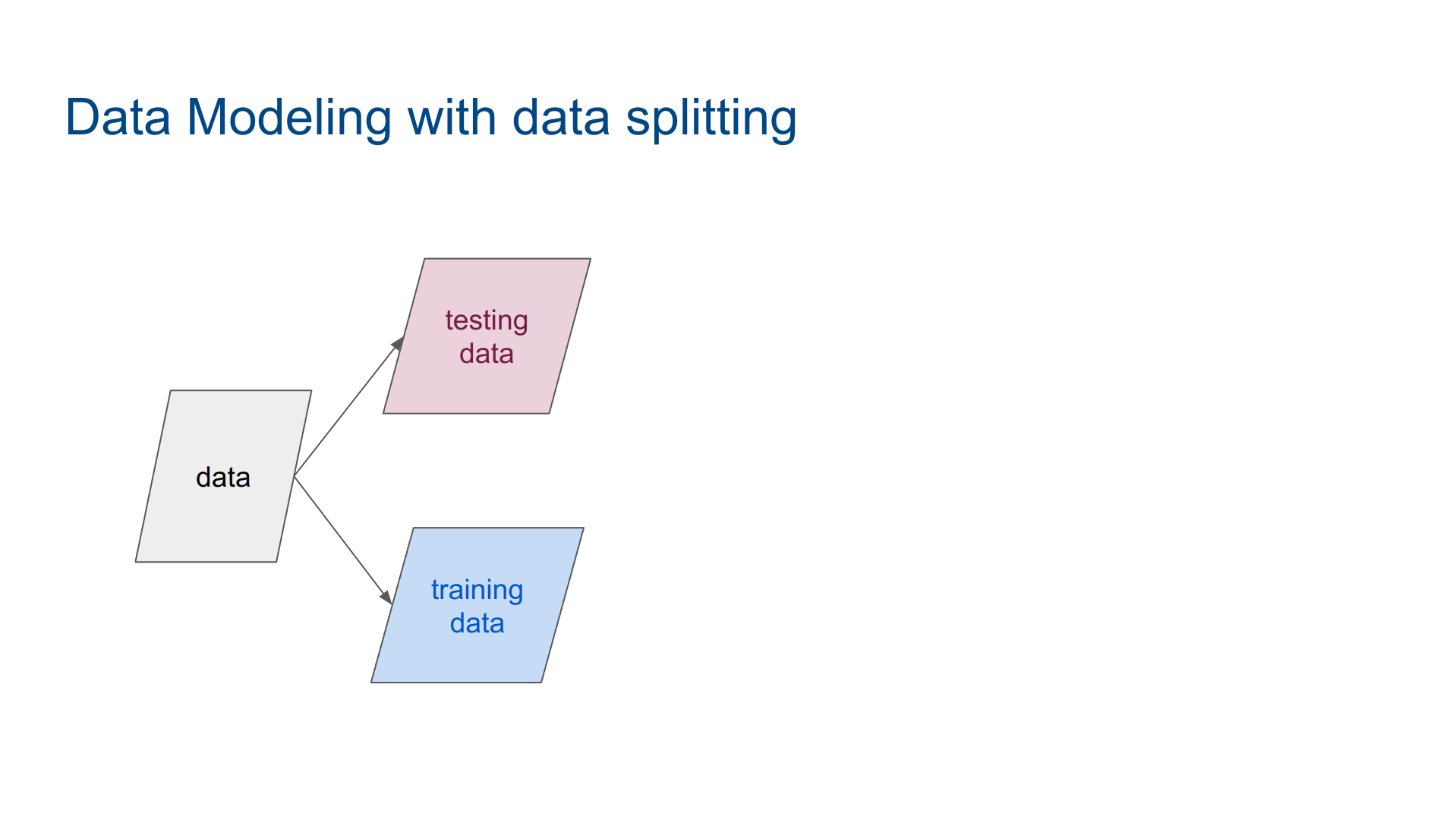

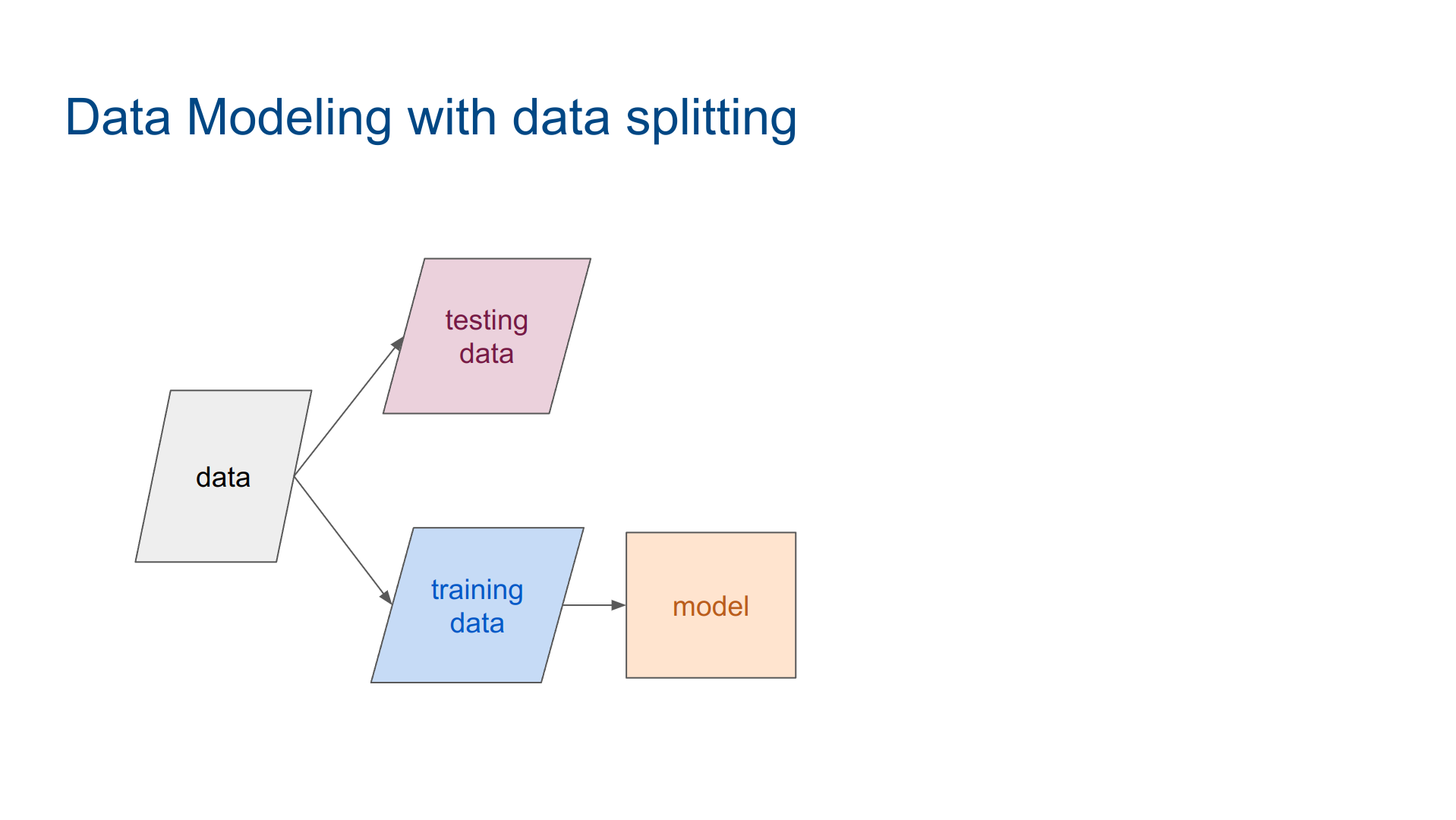

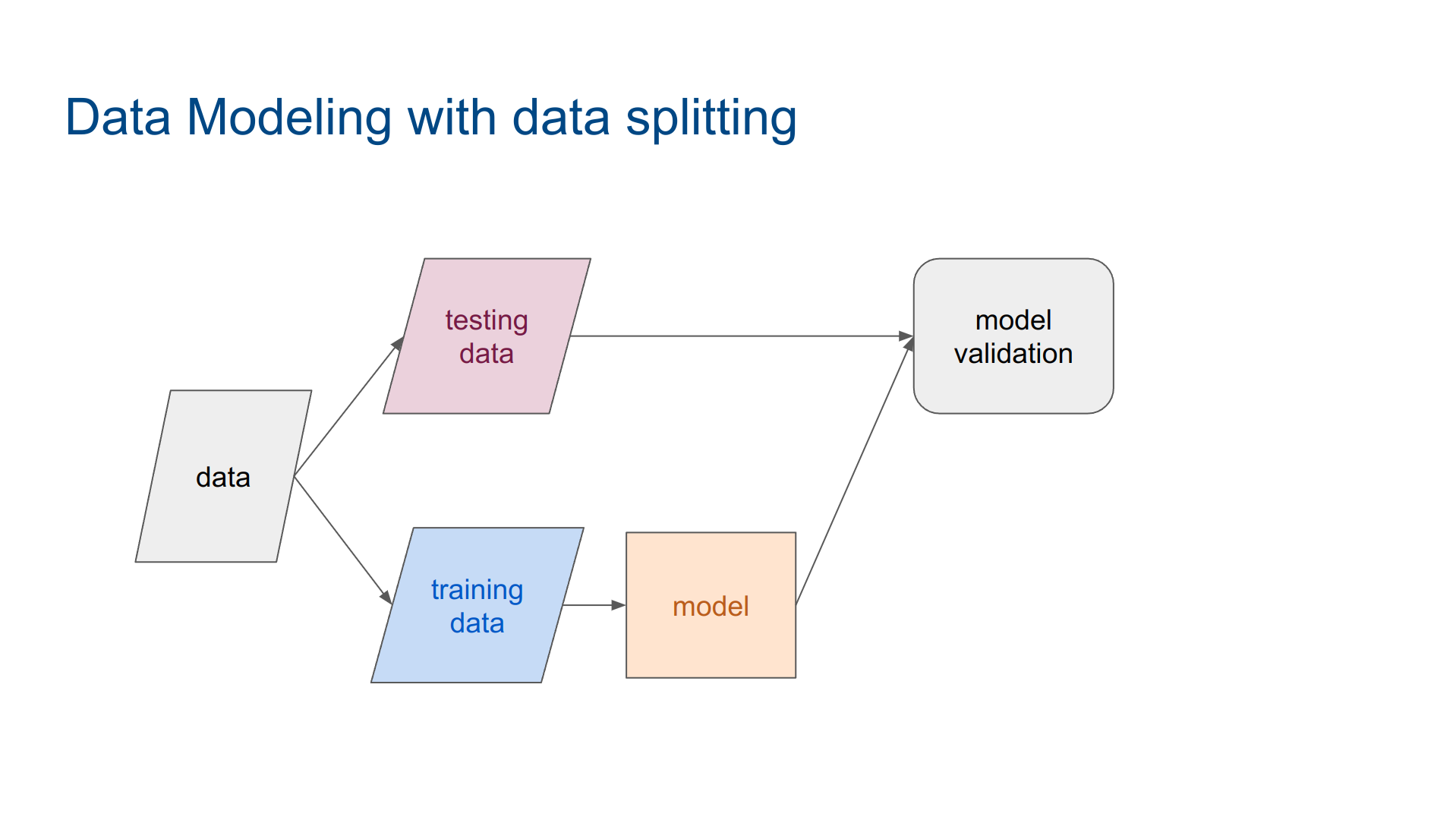

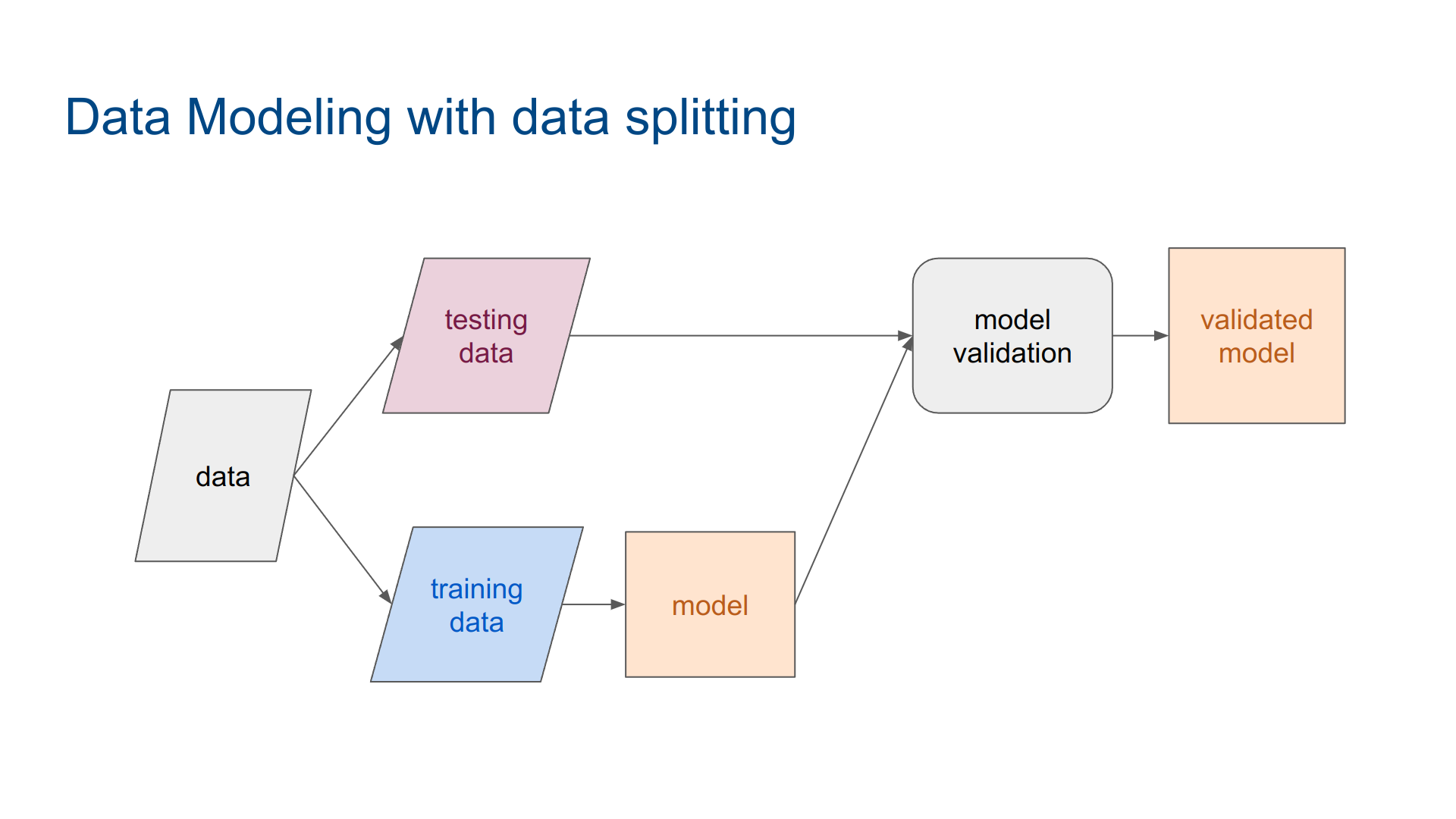

Data Modeling

Data → Preprocess → Explore → Model → Communicate

Environment → Data → Preprocess → Explore → Model → Communicate →

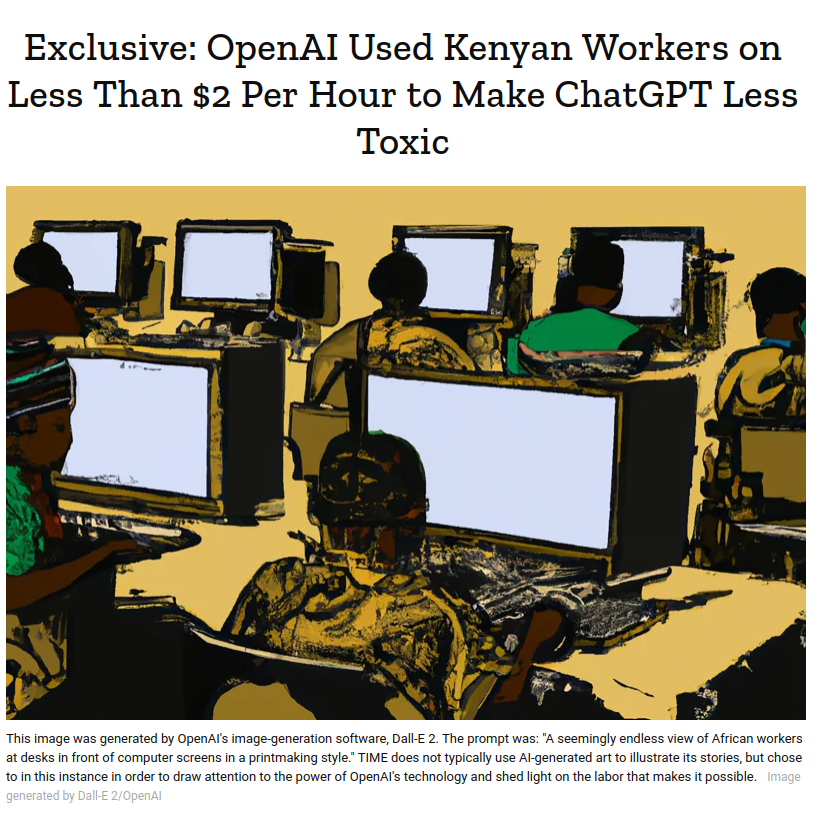

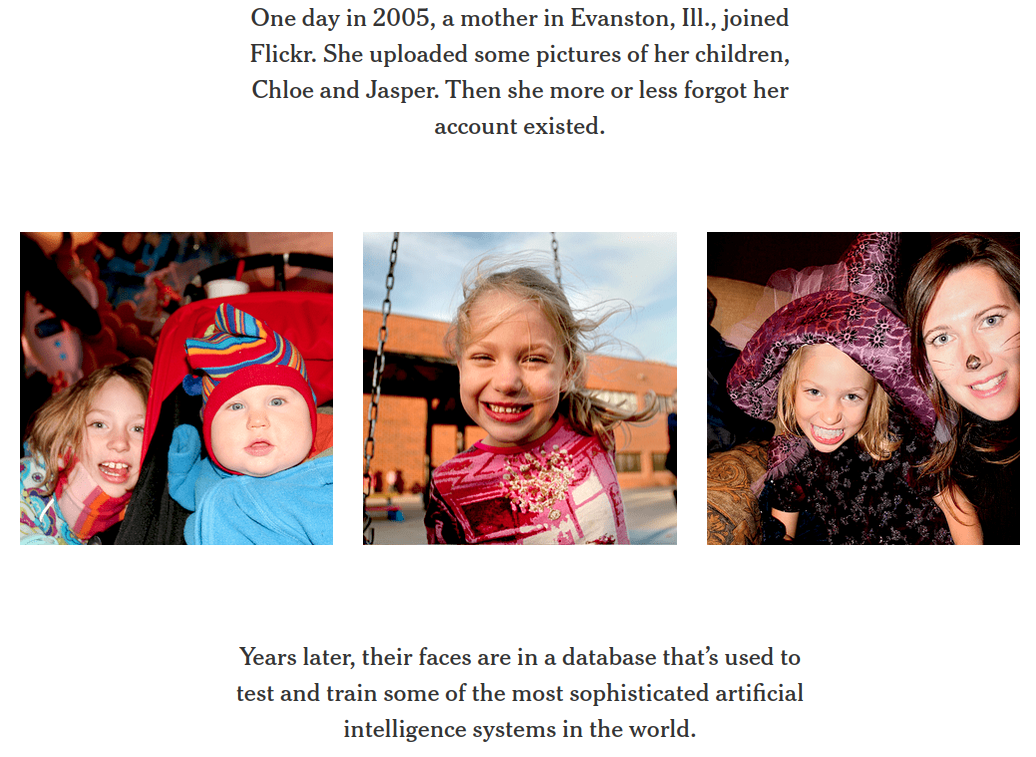

A framework for critical analysis

| Stage | Pitfalls |
|---|---|
| Data | Harmful data collection, lack of consent, insecure / lack of privacy, historical, representational, or measurement bias, ... |
| Preprocess | Labor exploitation, labeling by non-experts, incorrect labeling, trauma experienced by labelers, ... |
| Explore | Feature selection bias, bias in interpretation of data visualization, data manipulation, feature hacking, ... |
| Model | Bias in model choice, model-amplified bias, environmental impact, learning bias, evaluation bias, peripheral modeling, ... |
| Communicate | Biased model interpretation, ignoring variance, rejecting model, deploying harmful products, deployment bias, ... |
| Meta | "Pernicious feedback loops", runaway homogeneity, susceptibility to adversarial attack, lack of oversight or auditing, ... |
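The Meta category's "pernicious feedback loops" can be demonstrated with a toy simulation. Everything here is a hypothetical setup chosen for illustration: two neighborhoods with identical true incident rates, a single patrol sent each day to whichever neighborhood has the most recorded incidents, and incidents recorded only where the patrol goes.

```python
def simulate_feedback_loop(recorded: list[int], days: int) -> list[int]:
    """Toy model of a runaway feedback loop (hypothetical setup).

    Both neighborhoods have the SAME true incident rate; the only difference
    is the initial recorded count. Each day the single patrol goes to the
    neighborhood with the most recorded incidents (ties go to the lower
    index), observes one incident there, and records it. Incidents in the
    unpatrolled neighborhood go unrecorded.
    """
    counts = list(recorded)
    for _ in range(days):
        # max() returns the first maximal index, so ties favor index 0
        target = max(range(len(counts)), key=lambda i: counts[i])
        counts[target] += 1  # only the patrolled area generates new data
    return counts


print(simulate_feedback_loop([6, 5], days=10))  # → [16, 5]
```

A one-incident difference in the historical records becomes an eleven-incident difference after ten days, even though the underlying rates are equal: the model's output decides what its future data will be.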

Critical Questions:


Conclusions

Students should witness math fail. They should experience models performing poorly.

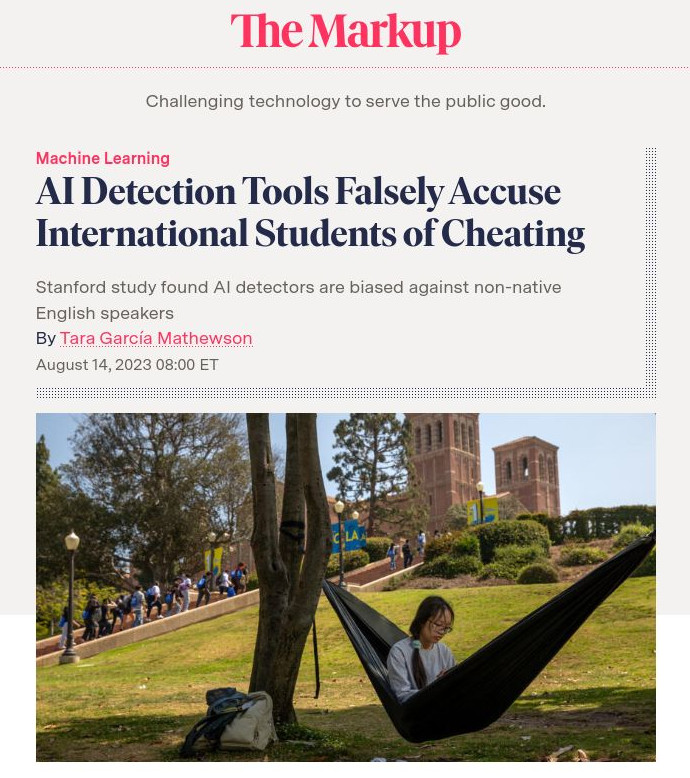

A real "AI education" includes understanding that AI frequently does not work, and knowing that deploying broken systems may cause harm.
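One low-stakes way to let students watch a model fail: fit a least-squares line to data that is secretly quadratic, then ask the line about an input outside the training range. The data (y = x²) and the `fit_line` helper are made up for illustration.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit y ≈ slope * x + intercept (hypothetical helper)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Training data comes from y = x**2, but only for x in 0..4.
xs = [0, 1, 2, 3, 4]
ys = [x ** 2 for x in xs]
slope, intercept = fit_line(xs, ys)
print(slope, intercept)  # → 4.0 -2.0

# Inside the training range the line is off by at most 2; far outside it, badly wrong.
x_new = 10
print(slope * x_new + intercept, x_new ** 2)  # → 38.0 100
```

The model looks reasonable on the inputs it was fit to and fails on inputs it never saw, which is exactly the kind of failure students should get to experience firsthand.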

Resources

- GenAI & Ethics: Investigating ChatGPT, Gemini, and Copilot - Dr. Torrey Trust

- DAIR Mystery AI Hype Theater 3000

- AI Snake Oil

- AI Now Institute - https://ainowinstitute.org/

- Lighthouse3, AI Ethics Weekly - https://lighthouse3.com/newsletter/

- fast.ai Data Ethics Course - Rachel Thomas

- Ethics in Mathematics Readings - Allison N. Miller

- Automating Ambiguity: Challenges and Pitfalls of Artificial Intelligence - Abeba Birhane

- On the dangers of stochastic parrots: Can language models be too big? - Emily M. Bender, Timnit Gebru, Angelina McMillan-Major, and Margaret Mitchell

- Rachael Tatman - YouTube

- SaTML 2023 - Timnit Gebru - Eugenics and the Promise of Utopia through AGI

- A.I. and Stochastic Parrots | FACTUALLY with Emily Bender and Timnit Gebru

- AIES '22: Proceedings of the 2022 AAAI/ACM Conference on AI, Ethics, and Society https://dl.acm.org/doi/proceedings/10.1145/3514094